In machine learning, the Long Short-Term Memory (LSTM) model is mainly used to process sequence data.

Understand the basic idea of Long Short-Term Memory (LSTM)

Sequence data underlies many tasks, from speech recognition and text analytics to stock price prediction. This is where the Long Short-Term Memory (LSTM) model comes in: a special type of recurrent neural network designed for such tasks.

- Unlike conventional recurrent neural networks (RNN), an LSTM can store information over longer periods of time. This makes it particularly suitable for sequence data where past events are important.

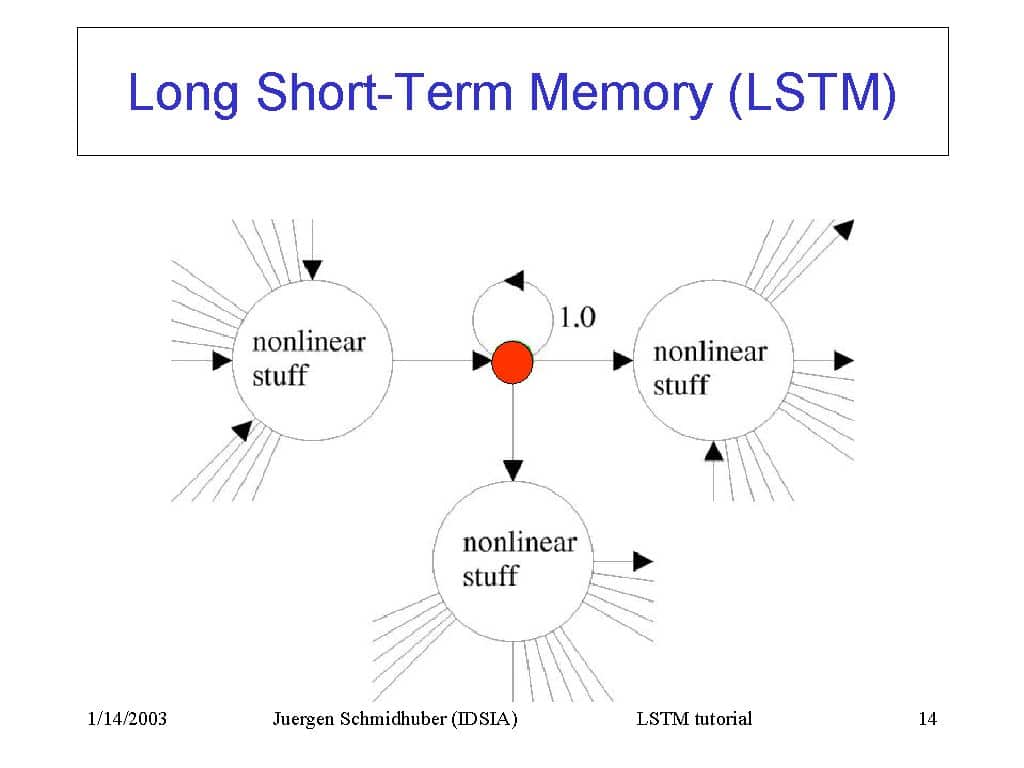

- An LSTM model consists of various “gates” that regulate the flow of information. These gates determine when information is forgotten, stored or retrieved.

- The cell state is at the heart of an LSTM. It acts as a kind of memory, storing information over long sequences.

- The forget gate decides what information to remove from the cell state. It uses the sigmoid function to produce a value between 0 and 1 for each entry of the cell state: entries scaled toward 0 are discarded, and entries scaled toward 1 remain in memory.

- The input gate decides what new information should be added to the cell state. It consists of two parts: a sigmoid activation, which decides which values to update, and a tanh activation, which generates new candidate values.
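The gate descriptions above can be sketched as a single LSTM time step in NumPy. This is a minimal illustration, not a reference implementation: the function name `lstm_step`, the stacked weight layout `W`, and the toy sizes are assumptions made for this example. The output gate follows the standard LSTM formulation and corresponds to the "retrieved" step mentioned above.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step (illustrative sketch).

    Assumed layout: W has shape (4 * hidden, input + hidden) and
    b has shape (4 * hidden,), stacked as [forget, input, candidate, output].
    """
    hidden = h_prev.shape[0]
    z = W @ np.concatenate([x_t, h_prev]) + b

    f = sigmoid(z[0 * hidden:1 * hidden])   # forget gate: what to drop from the cell state
    i = sigmoid(z[1 * hidden:2 * hidden])   # input gate: which entries to update
    g = np.tanh(z[2 * hidden:3 * hidden])   # candidate values to write
    o = sigmoid(z[3 * hidden:4 * hidden])   # output gate: what to expose from the cell state

    c_t = f * c_prev + i * g                # updated cell state (the memory)
    h_t = o * np.tanh(c_t)                  # new hidden state
    return h_t, c_t

# Toy usage: run five random inputs through the cell.
rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4
W = rng.normal(scale=0.1, size=(4 * hidden_size, input_size + hidden_size))
b = np.zeros(4 * hidden_size)
h = np.zeros(hidden_size)
c = np.zeros(hidden_size)
for x_t in rng.normal(size=(5, input_size)):
    h, c = lstm_step(x_t, h, c, W, b)
```

Note that the cell state is only ever scaled by the forget gate and incremented by the gated candidate values. When the forget gate stays close to 1 and the input gate close to 0, the cell state passes through almost unchanged, which is how information can survive over many time steps.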

Application and advantages of LSTM

LSTMs are able to capture long-term dependencies in sequences. This gives them a wide range of applications.

- LSTM models are well suited to predicting sequences such as weather data or stock prices. Because their memory lets them use information from far back in the sequence, LSTMs can capture complex relationships in the data.

- In NLP, LSTMs are often used for tasks such as text classification, named entity recognition and machine translation. They can better capture the context of texts and thus improve the quality of the results.

- LSTMs can also be used in speech recognition to convert spoken words into text.

- The ability to capture long-term dependencies makes LSTMs ideal for applications where temporal relationships are important, such as music composition.
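For the sequence-prediction use case listed above, a series usually has to be cut into fixed-length input windows, each paired with the value that follows it, before it can be fed to an LSTM. The sketch below shows one common way to do this. The helper name `make_windows` and the `window` parameter are hypothetical, chosen only for this illustration.

```python
import numpy as np

def make_windows(series, window):
    """Split a 1-D series into (input window, next value) pairs.

    Hypothetical helper for illustration: each row of X holds `window`
    consecutive values, and the matching entry of y is the value that
    immediately follows that window.
    """
    series = np.asarray(series, dtype=float)
    if window < 1 or window >= len(series):
        raise ValueError("window must be at least 1 and shorter than the series")
    X = np.stack([series[i:i + window] for i in range(len(series) - window)])
    y = series[window:]
    return X, y

X, y = make_windows([1, 2, 3, 4, 5, 6], window=3)
# X rows: [1, 2, 3], [2, 3, 4], [3, 4, 5]
# y:      [4, 5, 6]
```

The window length bounds how far back the model can look within one training example, so it is typically chosen to cover the longest dependency the task is expected to need.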